Artificial intelligence aided in-tank fish detection in RAS

May 10, 2023 By Rakesh Ranjan, Kata Sharrer, Scott Tsukuda, Christopher Good

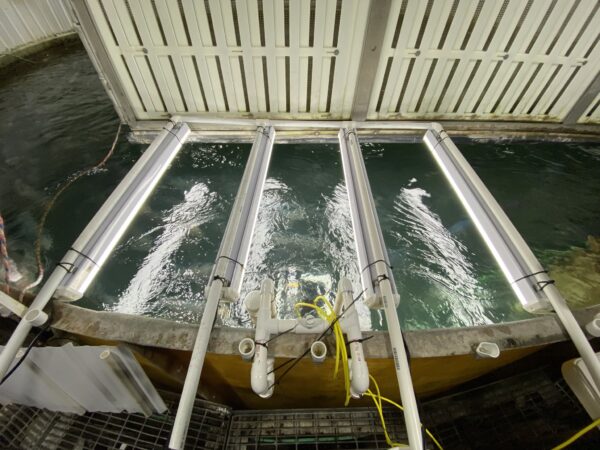

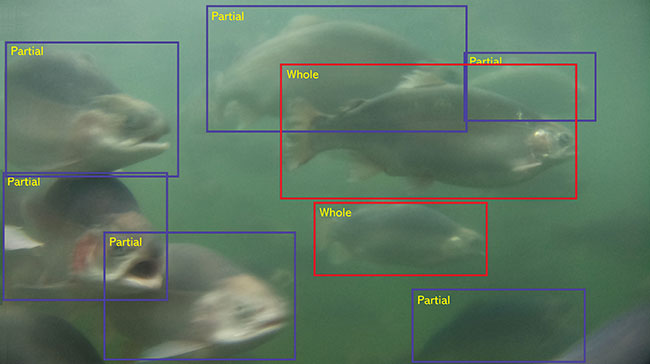

Whole and partial fish detected by the AI-aided fish detection tool developed at the Freshwater Institute (Photo: Freshwater Institute)

The aquaculture industry is witnessing a shift in management practices as cutting-edge precision technologies are adopted to improve fish production and product quality. These technologies enable farm managers to make data-informed decisions that maintain a suitable rearing environment for optimal fish growth, minimal losses, and high profitability. Rapid advancements in sensing technologies, edge computing, the internet of things, artificial intelligence, machine vision, and machine learning are paving the way toward the adoption of digital technologies in aquaculture.

Artificial intelligence and machine learning can help answer fish production-related questions and assist growers with important management decisions in recirculating aquaculture systems (RAS). Because data quality plays an important role in the accuracy and reliability of machine learning models, the data used to train a model must be sufficient, consistent, accurate, and representative of the system being modelled. Underwater data collection, high fish density, and water turbidity pose major challenges to acquiring high-quality imagery in RAS environments.

With the importance of data quality in mind, the Precision Aquaculture team at the Freshwater Institute conducted a study to develop an underwater sensing platform that can acquire high-quality imagery in RAS grow-out tanks, suitable for training robust in-tank fish detection models. We also investigated the effect of sensor selection, imaging conditions, and dataset size on the performance of the fish detection model.

Underwater sensing platform

Three imaging sensors – Raspberry Pi camera (RPi), Luxonis OAK-D, and Ubiquiti security camera (Ubi) – were selected for this study and integrated with a single-board computer for data acquisition and model deployment. The computers were programmed to acquire images at user-defined frequencies.
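The acquisition software is not described here, so the following is only a minimal sketch of how image capture at a user-defined frequency might be scheduled on a single-board computer. The `capture` callable is a hypothetical stand-in for whatever camera call a given sensor requires, and the function name, file naming, and parameters are assumptions made for illustration.

```python
import time
from pathlib import Path
from typing import Callable, List


def acquire_images(
    capture: Callable[[], bytes],   # hypothetical: returns one encoded image
    out_dir: Path,
    interval_s: float,              # user-defined time between frames
    n_frames: int,
    sleep: Callable[[float], None] = time.sleep,
    clock: Callable[[], float] = time.monotonic,
) -> List[Path]:
    """Save n_frames images, one every interval_s seconds."""
    out_dir.mkdir(parents=True, exist_ok=True)
    saved: List[Path] = []
    next_shot = clock()
    for i in range(n_frames):
        # Wait until the scheduled time; scheduling against a fixed
        # timeline avoids drift from the time spent capturing and saving.
        delay = next_shot - clock()
        if delay > 0:
            sleep(delay)
        path = out_dir / f"frame_{i:06d}.jpg"
        path.write_bytes(capture())
        saved.append(path)
        next_shot += interval_s
    return saved
```

Passing the camera call in as a function keeps the timing logic identical across sensors, so the same loop could in principle drive any of the cameras on the platform.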

The sensor-computer assemblies were installed inside waterproof enclosures, sealed, and vacuum tested to ensure underwater seal integrity. The assembled sensors were attached to a customized PVC cube. An off-the-shelf waterproof GoPro camera (GPro) was also installed on the platform for comparison. The platform was mounted to the tank wall to hold the sensor assembly approximately 1.2 metres below the water surface. Moreover, a supplemental light grid, consisting of four sets of diffused LED tube lights, was fabricated and mounted at the tank top to improve underwater visibility.

Fish detection model

Approximately 8,000 images were collected for model training in ambient light (i.e. only the ceiling light turned on) and supplemental light (i.e. both ceiling and supplemental lights turned on) conditions. The models were trained using images captured with various sensors (RPi, OAK-D, Ubi, and GPro) to detect whole and partial fish in the field of view of the sensor under both lighting conditions.

Mean average precision (mAP) and F1 score are commonly used metrics to evaluate the performance of machine learning models, particularly in object detection and classification tasks. Two different types of object detection models (YOLOv5 and Faster R-CNN) were tested. The performance of the fish detection model trained with various dataset sizes (100–2,000 images) was analyzed using mAP and F1 scores.
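For readers unfamiliar with these metrics, the sketch below shows one common way an F1 score is computed for a single image in object detection: predicted boxes are matched to annotated boxes by intersection over union (IoU), and F1 = 2TP / (2TP + FP + FN). The 0.5 IoU threshold, the greedy matching, and the function names are assumptions for illustration and not necessarily the evaluation settings used in the study. mAP, by contrast, averages the area under the precision-recall curve over the object classes and is not computed here.

```python
from typing import List, Tuple

# Box given as (x_min, y_min, x_max, y_max) in pixels.
Box = Tuple[float, float, float, float]


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def f1_score(
    predictions: List[Box],
    ground_truth: List[Box],
    iou_threshold: float = 0.5,  # assumed threshold, not from the study
) -> float:
    """F1 for one image; predictions are assumed sorted by confidence."""
    unmatched = list(ground_truth)
    true_pos = 0
    for pred in predictions:
        # Greedily pair each prediction with its best remaining annotation.
        best = max(unmatched, key=lambda gt: iou(pred, gt), default=None)
        if best is not None and iou(pred, best) >= iou_threshold:
            unmatched.remove(best)
            true_pos += 1
    false_pos = len(predictions) - true_pos
    false_neg = len(unmatched)
    denom = 2 * true_pos + false_pos + false_neg
    return 2 * true_pos / denom if denom else 0.0
```

In a crowded tank, heavily occluded fish tend to show up as missed annotations (false negatives), which lowers F1 even when most predicted boxes are correct.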

Model performance

Despite the high fish density, which resulted in object occlusion in the training images, the developed models effectively detected both partial and whole fish in the field of view of the sensors. The models performed best when trained with 1,000 images. Both YOLOv5 and Faster R-CNN models attained satisfactory mAP and F1 scores. While the best-performing Faster R-CNN model achieved an mAP score of 86.8 per cent, the highest score for the YOLOv5 model was 86.5 per cent.

The training time for the YOLOv5 models, however, was six to 14 times shorter than that for the Faster R-CNN models. YOLOv5 was therefore judged to be the more efficient choice for real-time edge computer vision applications and was selected for further investigation.

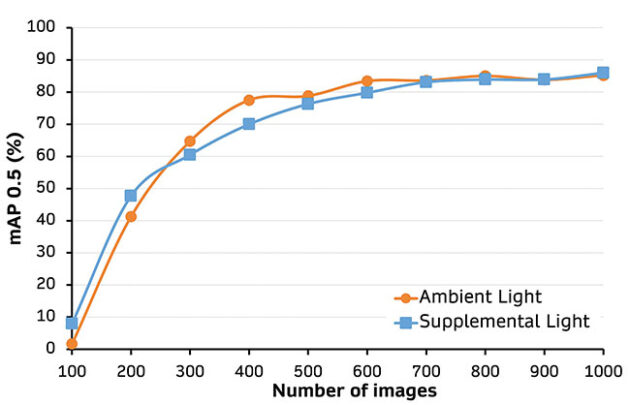

On inspecting the images from each light condition, we observed that background fish in images captured in ambient light had blurry features. With supplemental light, both foreground and background fish had relatively well-defined features with no blurry edges. Despite the relatively poor image quality in ambient light, the ambient and supplemental models performed similarly.

Since the ambient model was trained with a relatively complex dataset and had sufficient data to learn, it performed well on a test dataset captured in similar light conditions.

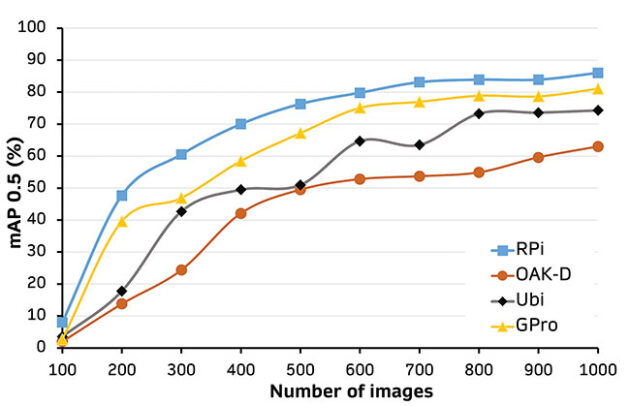

We also learned that sensor selection substantially affected model performance. In both light conditions, the mAP and F1 scores for the RPi model were considerably higher than those for the GPro, OAK-D and Ubi models (Figure 2). Additionally, the OAK-D and Ubi models had a lower whole-to-partial mAP ratio than the RPi and GPro models.

A lower whole-to-partial mAP ratio indicates that the model performed poorly in detecting whole fish compared to partial fish. This disparity can negatively impact the real-world application of such models, especially for applications like selective fish grading or robotic harvesting, where whole fish detection is critical for decision making.
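As a small worked illustration, the ratio can be read as the average precision for the whole-fish class divided by that for the partial-fish class. This reading is an assumption based on the description above, and the numbers in the comment below are purely illustrative rather than results from the study.

```python
def whole_to_partial_ratio(ap_whole: float, ap_partial: float) -> float:
    """Ratio of whole-fish to partial-fish average precision.

    Values well below 1 mean whole fish are detected less reliably
    than partial fish; values near 1 mean the classes are balanced.
    """
    if ap_partial <= 0:
        raise ValueError("ap_partial must be positive")
    return ap_whole / ap_partial


# Illustrative inputs only (not from the study): a model with
# AP 0.81 on whole fish and 0.90 on partial fish.
example_ratio = whole_to_partial_ratio(0.81, 0.90)
```

With these illustrative inputs the ratio comes out close to 0.9, meaning whole fish are detected nearly as reliably as partial fish.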

The RPi model achieved the highest whole-to-partial mAP ratio, at 0.9. Furthermore, the number of whole fish annotated for the RPi model was the highest because of the larger field of view of the RPi sensor (140°) compared to the GPro (120°), Ubi (86°), and OAK-D (69°). The higher number of input features in the RPi training dataset may have resulted in more robust model performance.

Overall, the RPi sensor performed best among the tested sensors and can potentially be adopted to develop computer vision applications for decision support. The optimized model was deployed on the video feed of a camera system to track the real-time movement of individual fish and their swimming patterns. This information can be used to investigate the effect of physical and biological stresses on fish behaviour.

Real-time monitoring of fish activity during a feeding event can also be used to optimize fish growth and feed conversion ratio (FCR) and to minimize wasted feed through automated adjustment of the feed delivery rate. Detailed results of this investigation are reported in our recently published journal article (Ranjan et al., 2023).

Future work

The lessons learned from this study are laying the foundation for our next project, which aims to develop an AI-enabled tool for round-the-clock mortality monitoring and alerting in RAS. The tool is intended to provide timely insight into the early stages of mortality trends and enable RAS managers to act before a mass mortality event occurs.

References

Ranjan, R., Sharrer, K., Tsukuda, S., & Good, C. (2023). “Effects of image data quality on a convolutional neural network trained in-tank fish detection model for recirculating aquaculture systems.” Computers and Electronics in Agriculture, 205, 107644.
